Preparing for regulation: how to overcome four barriers to AI in healthcare


In this opinion piece, Cal Al-Dhubaib (Pandata; OH, USA) explores how we can overcome the ethical and regulatory barriers to AI integration in healthcare. As a data scientist and strategist in medical AI, Cal offers his perspective on this area, along with practical solutions to these challenges.

The European Parliament's acceptance of the EU AI Act is a major milestone for AI regulation around the world. Like the General Data Protection Regulation (GDPR), the EU AI Act is expected to have a "Brussels effect", becoming a global standard for AI regulation. Yet based on some of the conversations I have had at health tech conferences this year, a number of institutions seem unfazed by this development. Their reasoning: if we aren't deploying high-risk AI systems, why does it matter?

Every industry must navigate the ethical challenges that accompany AI design, but the healthcare industry faces even higher risks. And whether you are working on a sensitive system (think: AI and medical devices) or not, the EU AI Act and other laws like Canada's Artificial Intelligence & Data Act tie the progress of AI in medicine directly to our ability to manage these risks.

The challenge? Ethical AI is a sociotechnical problem. Part of ethical AI development is technically driven: we need tools to detect bias and other issues in datasets. The other part is human-driven: deciding what is fair and when, with respect to cultural norms. Because AI ethics sits at the intersection of these two needs, it is nearly impossible to find a one-size-fits-all solution to the ethical and regulatory barriers to AI in healthcare. As a result, I am sharing some of my best recommendations that, when combined, lead to more effective and responsible AI in healthcare.

Top four barriers to AI in healthcare

Given the complexity of AI and the level of risk in the healthcare industry, healthcare institutions must navigate a number of regulatory barriers before successfully deploying safe machine learning (ML) models. Here are a few of the biggest barriers to AI in healthcare and how I would recommend addressing them.

1. The tradeoff between complexity and ground truth

The algorithms and ML models that we are designing today have enormous applications in healthcare.

- Clinical images, including X-rays, CT scans, and MRIs, can be quickly read and accurately interpreted by AI trained to identify abnormalities.

- Predictive AI can suggest appropriate care management strategies that account for the likelihood of a patient’s readmission and future risk factors.

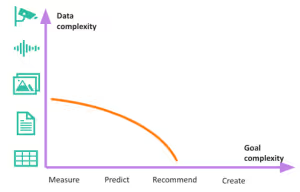

But with the natural complexity of healthcare data (doctor notes, labs, imaging data) and the increasing complexity of project goals, it becomes much harder to define ground truth and conduct ML observability. In ML, ground truth refers to our ability to know the correct answer to a prediction problem. With relatively simple goals, like predicting whether a patient will be readmitted within 30 days, we will have a chance 30 days later to observe what actually happened. As goals get more complex, like recommending amongst a set of items or summarizing clinical notes, it is harder to define ground truth since many equally correct answers will not be observed.

The accompanying figure depicts types of data, including spreadsheets, documents, photos, audio, and video, and common AI goals, including measure, predict, recommend, and create. As you move along either axis, both the level of complexity and the risk of bias increase. For example, it is much simpler to design and test an ML model that measures results based on spreadsheets. It is much more difficult to determine ground truth for a model that generates multimedia content.
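To make the readmission example concrete, here is a minimal sketch of what "waiting for ground truth" can look like in an observability check. It assumes a 30-day window as in the text; the `Prediction` record, its field names, and the helper functions are hypothetical and only illustrate the idea that a prediction can be scored once its outcome has had time to be observed.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Prediction:
    """One readmission prediction (hypothetical record layout)."""
    patient_id: str
    discharge_date: date
    predicted_readmission: bool
    # Stays None until the outcome has actually been observed.
    observed_readmission: Optional[bool] = None


def matured(pred: Prediction, today: date, window_days: int = 30) -> bool:
    """True once the full observation window has elapsed."""
    return today >= pred.discharge_date + timedelta(days=window_days)


def observed_accuracy(
    preds: List[Prediction], today: date, window_days: int = 30
) -> Optional[float]:
    """Accuracy over predictions whose ground truth is now known.

    Returns None when no prediction can be scored yet.
    """
    scored = [
        p for p in preds
        if matured(p, today, window_days) and p.observed_readmission is not None
    ]
    if not scored:
        return None
    correct = sum(p.predicted_readmission == p.observed_readmission for p in scored)
    return correct / len(scored)
```

For goals like summarization or recommendation there is no equivalent of `observed_readmission` to wait for, which is why those projects tend to need human review or proxy measures instead.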

When we are unable to determine ground truth, it becomes difficult to detect unexpected outputs, ultimately leading to less trust in the model. When practitioners and other users trust AI, they are more likely to use it and rely on its outputs, which can help drive innovation, efficiency and productivity. Trust in AI can also help organizations avoid negative outcomes, such as biases, errors and unintended consequences.

How to address this: incorporate a mandatory risk assessment into any AI project, one that includes some grading of the complexity of the goal and the underlying data. The AI Risk Management Framework from NIST is one example of this. I would also recommend developing an observability practice as ML solutions get more complex. Both of these actions will help you clarify which AI projects need extra scrutiny and establish controls for how often solutions must be evaluated.
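As a rough illustration of grading a project by goal and data complexity, the sketch below scores a project along the two axes described earlier. The numeric scales, tier thresholds, and review intervals are all assumptions made for the example; they are not taken from the NIST framework or any regulation and would need to be set by your own policy.

```python
# Illustrative scales only: the ordering follows the two axes described in the
# text, but the numbers themselves are assumptions, not a standard.
DATA_COMPLEXITY = {"spreadsheets": 1, "documents": 2, "photos": 3, "audio": 4, "video": 5}
GOAL_COMPLEXITY = {"measure": 1, "predict": 2, "recommend": 3, "create": 4}

# Hypothetical control: how often (in days) a solution in each tier is re-evaluated.
REVIEW_EVERY_DAYS = {"low": 365, "medium": 90, "high": 30}


def grade_project(data_type: str, goal: str) -> dict:
    """Combine both axes into a score, a risk tier, and a review cadence."""
    score = DATA_COMPLEXITY[data_type] + GOAL_COMPLEXITY[goal]
    if score <= 3:
        tier = "low"
    elif score <= 6:
        tier = "medium"
    else:
        tier = "high"
    return {"score": score, "tier": tier, "review_every_days": REVIEW_EVERY_DAYS[tier]}


if __name__ == "__main__":
    print(grade_project("spreadsheets", "measure"))
    print(grade_project("video", "create"))
```

A real assessment would weigh more than two factors, such as who is affected by the output and how reversible a wrong decision is, but even a coarse grade like this makes it easier to decide which projects get extra scrutiny.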

2. Algorithmic bias mitigation

If the headlines have taught us anything, it is that AI and ML algorithms are not foolproof. Unwelcome negative bias permeates even the best AI systems, making it a major regulatory hurdle for healthcare AI. Researchers from the University of Cambridge (England) found that 55 of the 62 clinical AI studies they systematically reviewed were guilty of "high risk of bias in at least one domain". In another study, researchers at the University of Chicago Medicine (IL, USA) found that Black patients were 2.54 times as likely to have at least one negative descriptor in their records compared to white patients.

Studies like these shed light on areas of healthcare that are easily infiltrated by unconscious bias, which is then passed on to models through training data. As the cost of compute (the cost of training and running AI workloads) goes down and our ability to work with multimodal data like clinical notes and medical images goes up, it becomes easier to introduce such biases into models.

How to address this: focus your efforts on stress testing. As models advance in complexity, stress testing becomes increasingly important. Just like aerospace engineers test plane wings under extreme circumstances, AI builders must spend time designing the right stress tests or scenarios to understand where AI models might fail, then clearly communicate these potential risks to the healthcare institutions using these systems.
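One simple stress test, sketched below, is to compare how often a model catches true positive cases in each patient subgroup and flag any group that lags well behind the best one. This is only one of many possible checks; the function names, the record layout, and the 0.1 gap threshold are assumptions chosen for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def recall_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """Per-group recall from (group, y_true, y_pred) rows with 0/1 labels.

    Groups with no positive cases are left out, since recall is undefined there.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def flag_gaps(recalls: Dict[str, float], max_gap: float = 0.1) -> List[str]:
    """Groups whose recall trails the best-performing group by more than max_gap."""
    if not recalls:
        return []
    best = max(recalls.values())
    return sorted(g for g, r in recalls.items() if best - r > max_gap)


if __name__ == "__main__":
    # Toy rows, not real patient data.
    rows = [("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("B", 1, 1), ("B", 1, 0)]
    recalls = recall_by_group(rows)
    print(recalls, flag_gaps(recalls))
```

The same pattern extends to other scenarios worth stress testing, such as rare conditions, unusual note styles, or data from a site the model was never trained on.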

3. Safety, transparency and heightened need for data governance

As open source tools have exploded, there are a growing number of pre-trained models available for use, especially large language models. In many cases we think about transparency as understanding why a model is making the predictions that it is making. However, when dealing with more complex models like neural networks, especially ones that deal with text and images, explainability suffers. Our next best alternative for now is to understand what went into these models and how they reach decisions.

Algorithmic transparency is a key concern in many AI legal discussions, especially in high-risk environments like healthcare. Less transparency makes it challenging to pinpoint the source of issues like negative bias or harmful outputs, and greatly restricts our ability to assign blame and take responsibility for decision-making.

How to address this: check for model cards. Model cards accompany models and include key information like intended uses for the model, how the model works, and how the model performs in different situations. They are similar to the ingredients and nutritional facts on the back of food labels or drug sheets. Not only do they provide information about an AI model's performance, potential biases and limitations, but they are also designed to increase transparency and accountability. Increasingly, responsible data science teams choose between models based on the availability, completeness and accuracy of their model cards. Writing model cards is also an emerging best practice internally when publishing solutions that use a home-grown model.

Equally important are data cards. When curating AI datasets, document answers to the following questions:

- Where did it originate?

- Who owns it?

- What biases or anomalies exist within it?

- Who is represented?

- Who is missing?
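The questions above can be captured in a small structured record so that gaps are easy to spot before a dataset is used. The sketch below is one possible shape, not an established data card format; the `DataCard` class, its field names, and the `unanswered` helper are hypothetical.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class DataCard:
    """Free-text answers to the five curation questions (hypothetical layout)."""
    origin: str = ""        # Where did it originate?
    owner: str = ""         # Who owns it?
    known_biases: str = ""  # What biases or anomalies exist within it?
    represented: str = ""   # Who is represented?
    missing: str = ""       # Who is missing?


def unanswered(card: DataCard) -> List[str]:
    """Names of the questions that are still blank, in declaration order."""
    return [f.name for f in fields(card) if not getattr(card, f.name).strip()]


if __name__ == "__main__":
    # Placeholder answers for illustration only.
    card = DataCard(origin="Hospital EHR export", owner="Data governance office")
    print(unanswered(card))
```

A team could refuse to train on any dataset whose card still has unanswered questions, which turns the checklist into an enforceable control rather than a suggestion.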

4. AI literacy

When AI is introduced to the mix, the traditional informed consent process becomes more complicated. Healthcare providers are now faced with questions like:

- When should we tell patients that we are using AI for diagnostic and/or treatment purposes?

- How much detail should we disclose?

- What are the best ways to explain the complexities of AI in understandable ways?

These questions are especially challenging to answer when healthcare teams have low AI literacy. AI literacy involves having a basic understanding of AI technologies and how they work. It also includes understanding the potential impact of AI on society, industries and jobs. Oftentimes, users have unrealistic expectations for, and low trust in, AI because they do not fully understand its capabilities.

As an example, we worked with a health technology client to identify patients for insurance coverage. We found that the model was successful at identifying borderline cases, ones that the team probably would not have considered. Initially, the team rejected the model because they did not trust it to make accurate decisions. However, after educating the team on how the model was arriving at decisions and why, we immediately garnered more buy-in and revenue growth, without ever making changes to the original model.

How to address this: hosting AI training and workshops, and turning to other resources like Andrew Ng's prompt engineering course and Cassie Kozyrkov's Making Friends with Machine Learning series, are great ways to improve AI literacy. I have also observed that the most mature organizations practicing AI literacy today are ones that have cross-functional AI safety teams, composed of professionals like data scientists, lawyers and clinicians, to review new and ongoing projects for potential risks and to discuss emerging regulation.

How can healthcare AI builders and users prepare today?

One thing is clear: AI is on its way to healthcare, and we know it will soon be regulated. We are still in the early days of AI regulation, which means we are likely to see lots of changes in particular rules as the industry and society collectively learn. It is most important to start with the basics:

- Invest in the AI literacy of your workforce.

- Create an AI safety review team.

- Adopt an AI policy to define levels of risk and risk management controls.

Regulation is quickly approaching. While we do not know what the final versions of these laws will look like, there is a general consensus around model transparency, data governance, and testing the safety and fairness of models.

Disclaimers: The opinions expressed in this feature are those of the interviewee/author and do not necessarily reflect the views of Future Medicine AI Hub or Future Science Group.

About the Author: Cal Al-Dhubaib is a globally recognized data scientist and AI strategist in trustworthy artificial intelligence, as well as the founder and CEO of Pandata, a Cleveland-based AI consultancy, design, and development firm.
