Why AI in radiology failed

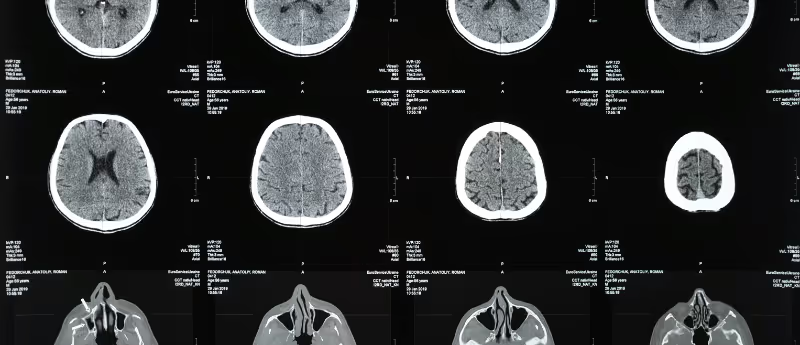

In this opinion piece, Andrea Isoni (AI Technologies; London, UK), author of Machine Learning for the Web, provides his outlook on why applications of AI in radiology have been lacking and his thoughts on how the field will evolve in the future.

Around 2018, headlines announced that AI would soon be able to replace radiologists (driven largely, though not solely, by the heavily publicized IBM Watson). Doctors themselves turned away from radiology as a specialization en masse. In this case, AI failed, and we may now have an actual shortage of radiologists. Today, AI diagnostic accuracy scores are approximately 65%, compared to an 85% average for a radiologist.

What happened?

First, as the figures above imply, accuracy is (rightfully) calculated based on the diagnosis, not just the images. A radiologist today reaches a diagnosis not from the radiographic image alone, but also from other data and the general condition of the patient, which the AI model does not really consider.

Second, both radiographic images and their metadata come from different manufacturers, meaning they are not standardized, and labels are sometimes not as strictly consistent as they should be. The AI model has been trained on heterogeneous data, so it is unsurprising that its accuracy can be questioned and improved upon.

The case moving forwards

Over time, the second point above regarding accuracy will be solved. Maybe in 5 years, or maybe in 10. It will depend on how quickly data can be standardized and become 'AI friendly': that is, on the ease with which radiographic images and their metadata can be uniformly ingested into AI training. Manufacturers may or may not have the right incentives to do so.

The question that remains to be tackled is the first one: how can an AI model make a fully informed diagnosis by incorporating different sources of patient data, for example data from physical examinations, and combining them with radiographic images? Combining these data sources produces many different scenarios, and the available data may not be evenly distributed across them, so the model cannot actually replace the radiologist. In other words, until we have standardized patient data with good statistics for the different cases (to cope with the variability of scenarios), it may be difficult for an AI model to perform as well as a human.

My guess of the future

In the next 5 years, if there is an economic incentive, it may be possible to build specialized AI models for certain diseases (for example, an AI model for cancers or for broken bones), but not for general conditions. Radiologists will always be needed; their jobs will change over time, not disappear. A human today can ingest and generalize across non-standardized, heterogeneous data in a way that we still need to develop in AI. If we do manage to create this ability in AI, then AI radiologists might be around soon.
