London Biotechnology Show 2025

The London Biotechnology Show brought together industry, academic, and regulatory experts to discuss the most exciting updates in the biotech space and, critically, the biggest gaps that need filling as the landscape unfolds.

Key takeaways:

- Expanding lab space is essential for London’s growth over the next decade.

- Cyber security cannot be treated as a luxury; it has to be embedded.

- Scientists are barking up the wrong tree by overemphasizing the place of large language models (LLMs) in healthcare; multimodal integration is an essential next step.

- AI is being integrated into clinical trial practice: the UK’s sovereign regulator of medicines and medical devices is using two bespoke models to save hours otherwise spent poring over trial regulatory documents.

Howard Dawber | Why London Is Pioneering the AI Revolution

Opening the day was Howard Dawber, Deputy Mayor for Business and Chair of London and Partners. He highlighted London’s prime position as an innovator in the health and life sciences space, a sentiment echoed by NVIDIA CEO Jensen Huang at London Tech Week two weeks earlier.

According to Huang, the UK’s AI talent is the “envy of the world.” The country is increasingly recognized as a global AI hub, attracting a flurry of tech investments and partnerships with top UK institutions, including NVIDIA’s own initiatives such as a new AI training center to upskill the UK workforce.

Basking in the “golden glow” of London Tech Week, Dawber points to the £1.4 billion of venture capital invested in London’s life science businesses as a “powerful affirmation of the city’s continued growth and potential in AI.”

This £1.4bn is feeding London’s vibrant start-up community: home to around 2,400 life science startups and scale-ups, London has more than France and Germany combined.

And these companies tend to “fly” in a city considered Europe’s best breeding ground for businesses. In the last five years, Europe has produced six life science unicorns (startups valued at $1bn or more), and three of them are based in London, putting it ahead of every other European city.

But life science commerce doesn’t thrive without research institutions; it’s laboratories where breakthroughs are made.

At the moment, London has one million square feet of lab space. This is set to double to two million by the end of next year and, by 2032, to expand more than seven-fold to 7.2 million square feet.

That would place the UK capital among the top five cities worldwide for lab space and, again, ahead of every other city in Europe: an impressive feat, given the country’s small size.

Such an expansion would support the Mayor of London’s Growth Plan, published in February, which set out plans to restore productivity growth of 2% a year over the next decade. If achieved, this would add £107bn to the London economy and give Londoners around £11,000 in extra pre-tax income, something that would reportedly “transform the UK economy and the lives of Londoners.”

Health and life sciences sit at the heart of this growth trajectory. London’s top universities, along with institutions like the Alan Turing Institute and the Francis Crick Institute, provide the research “fuel” powering biotechnological innovation. And it’s this innovation that continues to attract global investment.

Dawber concludes the talk:

“We’re now transitioning away from a city that’s very good at taking advantage of developments that have been innovated elsewhere to a frontier creator of breakthrough technological solutions.”

Professor Chris Johnson | Cyber Safety Cannot Sit on the Back Burner

Professor Chris Johnson, Chief Scientific Adviser in the Department for Science, Innovation and Technology, discussed one of the biggest fears circulating in the AI community right now: cyber security.

Since news broke of the first patient death linked to a cyber attack, data protection concerns have been mounting. And with AI research and implementation consistently making headlines, Johnson is calling for stronger protection for life science companies building AI systems across the country.

He opens by addressing a big issue with antivirus software: it can show the presence of known malware, but not its absence. In practice, a programme will flag the viruses it recognizes but cannot flag any it doesn’t, so a clean scan does not prove that a system is uncompromised.
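To make Johnson’s point concrete, here is a deliberately simplified sketch of signature-based scanning. It assumes detection works purely by matching data against a list of known byte patterns; real antivirus engines also use heuristics and behavioural monitoring. The signature names, patterns, and the `scan` function are hypothetical.

```python
# Hypothetical, minimal sketch of signature-based scanning.
# Real antivirus engines combine signatures with heuristics and behaviour monitoring.

KNOWN_SIGNATURES = {
    "Hypothetical.WormA": b"\xde\xad\xbe\xef",
    "Hypothetical.TrojanB": b"EVIL_PAYLOAD",
}


def scan(data: bytes) -> list[str]:
    """Return the names of known signatures found in `data`.

    An empty list only means no *known* pattern matched; it says
    nothing about threats missing from KNOWN_SIGNATURES.
    """
    return [name for name, pattern in KNOWN_SIGNATURES.items() if pattern in data]


if __name__ == "__main__":
    known_threat = b"header" + b"EVIL_PAYLOAD" + b"footer"
    novel_threat = b"header" + b"BRAND_NEW_PAYLOAD" + b"footer"

    print(scan(known_threat))  # ['Hypothetical.TrojanB']
    print(scan(novel_threat))  # [] : the novel payload is missed, not proven absent
```

The second scan returns an empty list even though the data is malicious, which is exactly the gap between “no known threats found” and “no threats present.”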

“It’s reached the point where some antiviral products have been compromised and have actually inserted the virus in your software,” says Johnson. “So in that context, how can you really be confident at any point in the integrity of your data?”

The “positive side,” he notes, is the UK’s vibrant cybersecurity industry. It’s now worth around £13.2 billion and employs some 67,000 people. It has also grown by 12% in the last year, a “really startling figure,” Johnson says.

But despite the industry’s growth, awareness remains generally low, especially in life sciences. Reflecting on visits to life science companies over the past month, Johnson described the cyber security knowledge of laboratory technical staff as “not good.”

“Very few of them understand the impact of installing software updates,” he says. These updates improve device stability, refresh built-in protective software, and patch the security vulnerabilities that would otherwise expose data. So while they may seem redundant, they provide an important shield against cyber attacks.

He continues:

“Very often, the procurement of the life sciences companies make no mention of cyber security, or they make bad statements such as ‘shall have cyber security’ without any form of measurement to determine if that requirement is met.”

Homing in on AI, Johnson acknowledges deliberate data poisoning of machine learning models as “an increasing issue.” Medical LLMs are especially vulnerable: one paper, published in Nature Medicine at the start of the year, found that replacing just 0.001% of training tokens with medical misinformation produced “harmful” models that were more likely to propagate medical errors.
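Percentages this small are easy to dismiss, so the sketch below converts 0.001% into absolute token counts. The corpus sizes are illustrative assumptions, not figures from the study, and the `poisoned_tokens` helper is hypothetical.

```python
def poisoned_tokens(total_tokens: int, fraction_percent: float) -> int:
    """Tokens replaced when `fraction_percent` percent of a corpus is poisoned."""
    # Divide by 100 to turn a percentage into a fraction; round away float noise.
    return round(total_tokens * fraction_percent / 100)


POISON_PERCENT = 0.001  # the fraction reported in the study cited above

# Illustrative corpus sizes (assumptions): 1 billion, 100 billion, 1 trillion tokens.
for corpus in (1_000_000_000, 100_000_000_000, 1_000_000_000_000):
    count = poisoned_tokens(corpus, POISON_PERCENT)
    print(f"{corpus:,} tokens -> {count:,} poisoned tokens")
```

Even at a trillion tokens, 0.001% is ten million tokens: a tiny share of the corpus, but a volume of misinformation an attacker could plausibly plant.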

This makes cyber security a cornerstone of effective AI implementation, especially in healthcare.

How can companies make sure this need is met? Johnson emphasizes contingency planning, appointing cyber security experts to risk and audit committees, keeping software up to date, and ensuring technical staff are aware of proper cyber safety practices.

“Cyber security really is the bedrock of confidence,” he concludes. “It’s this confidence that lets us innovate, to integrate our systems, to store and retrieve and analyze new forms of genetic data, new forms of synthetic biology.”

“If we can protect our intellectual property at a national level, then the future growth of this country and the countries that we trade with will be significantly globalized.”

Thomas Balkizas | Is AI Hitting a Wall?

Thomas Balkizas, Senior Director of Life Sciences at Microsoft, spoke about the challenges facing AI in healthcare, highlighting (1) the difficulty of integrating multimodal data, and (2) the risks of focusing exclusively on LLMs.

He starts by drawing attention to four emerging technologies:

- GenAI, e.g., ChatGPT and Copilot.

- “Novel AI models”, tailored to fit a niche. In healthcare, these newer LLMs are performing significantly better in medical examinations than they used to, even without fine-tuning.

- A roadmap towards quantum computing. Although its main advantage is commercial, through more efficient compute, Balkizas also points to a scientific advantage, with quantum systems able to “drive AI to new heights” and achieve unprecedented performance.

- Unified data estate — a centralized, integrated infrastructure for managing all types of data (structured, unstructured, real-time, etc.) across an organization in a consistent, secure, and accessible way.

“Despite all of these developments in AI and data science, scientists today are still met with challenges,” says Balkizas.

Notably, many AI models don’t integrate with anything else: they’re bespoke, built with specific needs in mind. This limits what an artificial system understands about the world, moving it further from the “world model” that could more closely emulate human reasoning.

But there’s another issue: the “awe” of LLMs.

While LLMs have certainly been instrumental in AI’s progress, especially in public engagement, their humanlike fluency tends to inflate perceptions of their capabilities: because they “talk” like a human would, we overestimate how intelligent they really are. As a result, many developers bet heavily on LLMs, even where they’re not the best AI tool for the job.

Healthcare is one such case: only a small fraction of health data is written text, the form that LLMs process as tokens.

“A lot of healthcare data is not text-based,” says Balkizas. “And that presents us with a challenge. We have less publicly available images, for instance, to train models, so they struggle in these contexts.”

He then poses a question: if AI models are achieving high performance in testing, why isn’t real-world take-up higher?

One key factor is that this “high performance” is achieved on multiple-choice exams, like those a medical student might sit. These are textbook scenarios, argues Balkizas, and they don’t reflect the nuances of real clinical cases.

“Doctors are actually presented with complex probabilities, not necessarily in pathology work. These [complex probabilities] can be secluded in imaging, genomic, quantum data.”

He describes this data as “dark”: it may be hidden in activity monitors, smartwatches, or medical records. Shedding light on it with multimodal AI systems could bridge the gap between research and clinical application, a gap that, if closed, could “help researchers find a needle in a haystack,” Balkizas concludes.

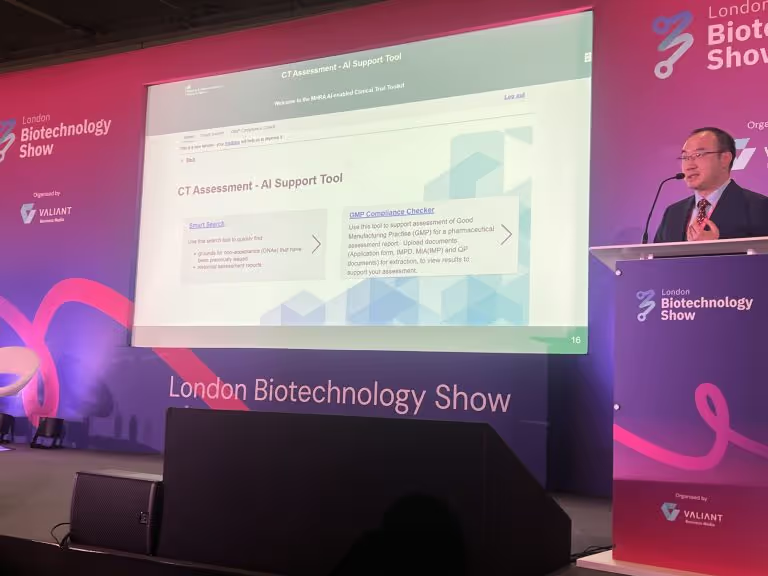

Dr Kingyin Lee | Modernizing Clinical Trial Oversight: MHRA’s AI Approach

Dr Kingyin Lee, Head of Clinical Trials at the Medicines and Healthcare products Regulatory Agency (MHRA), discussed how the UK’s sovereign regulator of medicines and medical devices is integrating AI into clinical trial practice.

Lee starts by acknowledging Dawber’s earlier speech: “As [Dawber] mentioned, we are developing into a proportionate and flexible regulator, helping to develop the UK as a leading destination for innovators.”

But rather than putting London on a pedestal as a lively hub for technological solutions, Lee emphasizes that the UK keeps patients’ interests and safety as its top priority. “We’re building our reputation to be agile and effective,” he says.

On the clinical side, the MHRA handles an average of 5,000 trials a year, each with “hundreds and hundreds” of pages to review to make sure that “the information is all there and sound,” says Lee.

Finding all of the pertinent information is a time-consuming process that can take hours, and one where the MHRA saw potential for AI to help.

The MHRA, embracing this potential, has developed two AI tools:

- AI-Airlock Sandbox. This Good Manufacturing Practice (GMP) checker helps pharmaceutical assessors judge the GMP aspects of clinical trials, such as quality assurance and the traceability of manufacturing processes.

- The Grounds for Non-Acceptance (GNA) assessor. GNAs are issues flagged with a trial, such as missing quality documents or gaps in eligibility criteria, that must be addressed before the trial can be approved.

Around half of all clinical trial applications receive at least one GNA, which is typically resolved by following guidance or providing a justification. The GNA assessor draws on a “treasure trove” of comments left by assessors on more than 110,000 prior trials, identifying the nuances that link these comments to GNA decisions.

“Our assessment team might take hours to review the complexity design of a trial with many different patient groups. This can be cut down to literally a few minutes — it’s incredible,” says Lee.

He adds that, while the tools aren’t ready just yet, the MHRA will be able to test them directly and see how they work by the end of June.

But Lee recognizes that AI can’t do everything the MHRA needs, pointing to both time and funding constraints. He instead describes it as a modular tool that, at least for the time being, “seems to work very well” for the MHRA’s needs.

He finishes by looking, albeit loosely, into the “crystal ball” of AI at the MHRA:

“There are projects that we can visualize, and the research we’ve used for two tools could be utilized for new technologies. But we need to make sure that there is still a human in the loop — this is the position we have in clinical trials.”

He continues:

“While generative AI is great, it’s essential to know that we know what we’re doing, when we’re doing it, and enhance the MHRA’s capability to assess clinical trials while not drifting from what is acceptable.”
